The goal of this laboratory exercise is for the image analyst to gain a better understanding of, and experience with, the collection, measurement, and interpretation of spectral reflectance signatures of various surface features. These features include Earth surface and near-surface materials captured in satellite imagery. In this lab we discuss how to collect these spectral signatures from remotely sensed images and graph them in order to analyze them and verify whether they pass the "spectral separability test".

Methods

In this lab, we will use ERDAS Imagine 2013 to measure and plot the spectral reflectance values of 12 materials and surfaces from a remotely sensed image of Eau Claire County, WI. The features we will measure are listed below:

1. Standing Water

2. Moving water

3. Vegetation

4. Riparian vegetation

5. Crops

6. Urban Grass

7. Dry soil (uncultivated)

8. Moist soil (uncultivated)

9. Rock

10. Asphalt highway

11. Airport runway

12. Concrete surface (Parking lot)

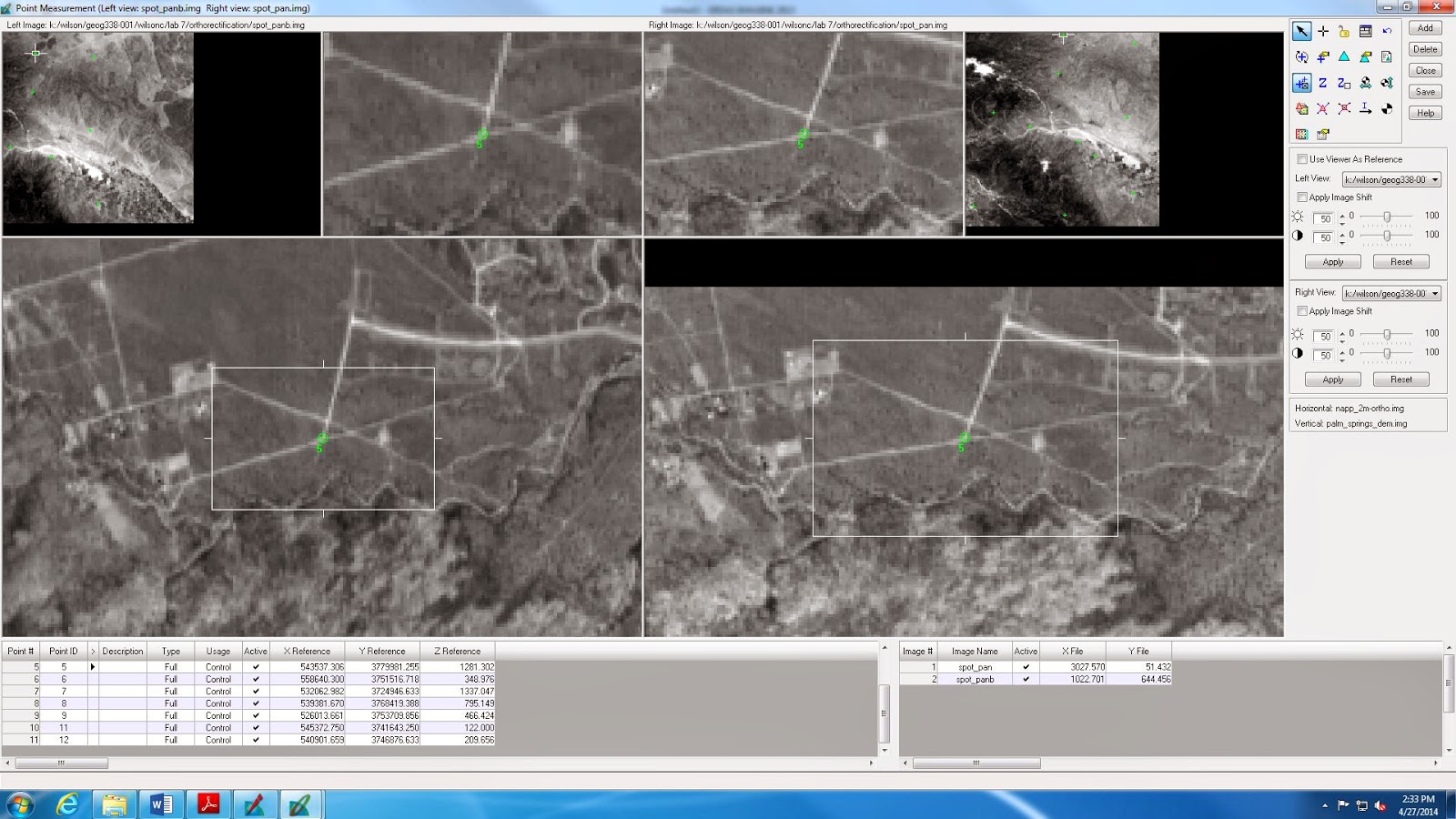

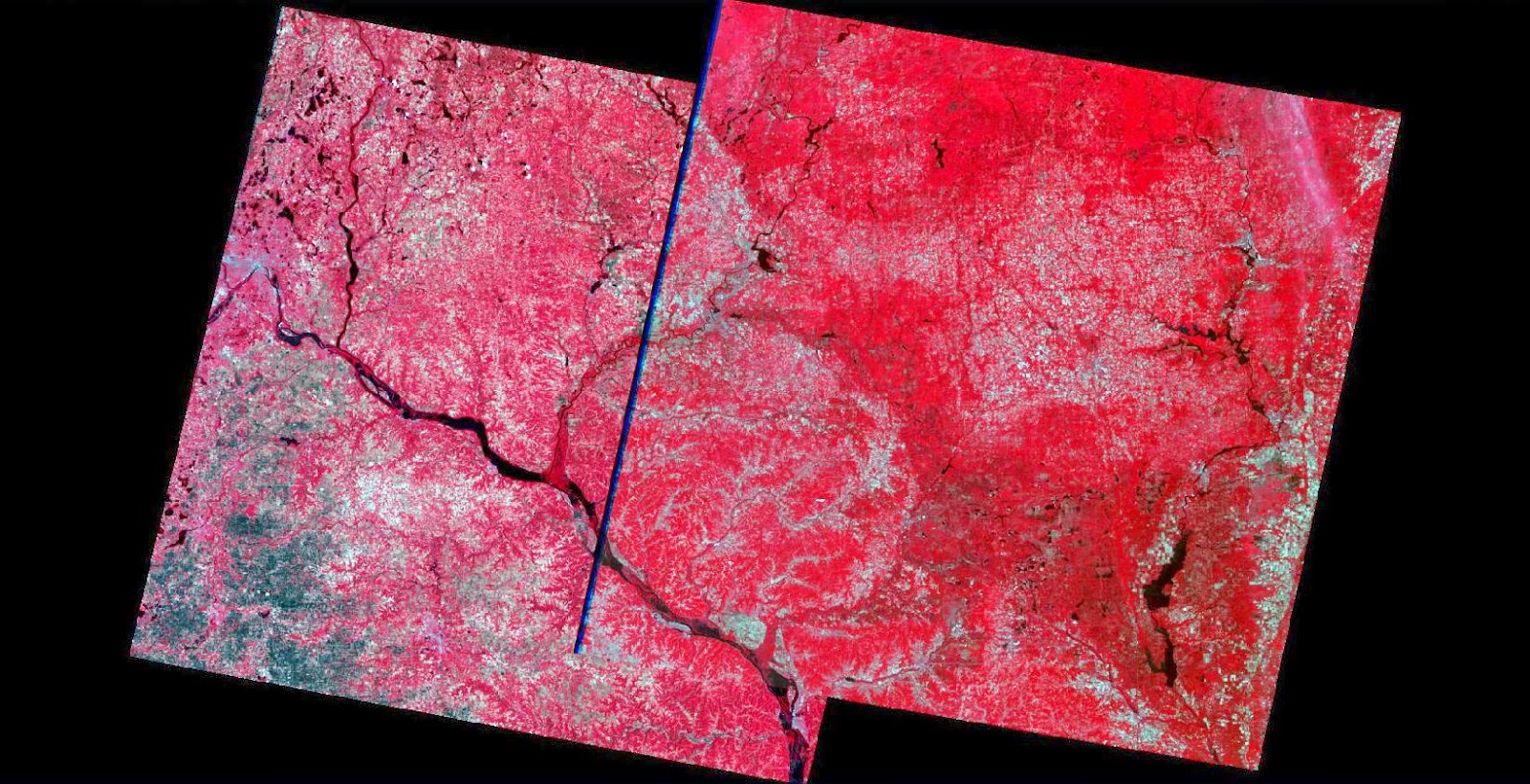

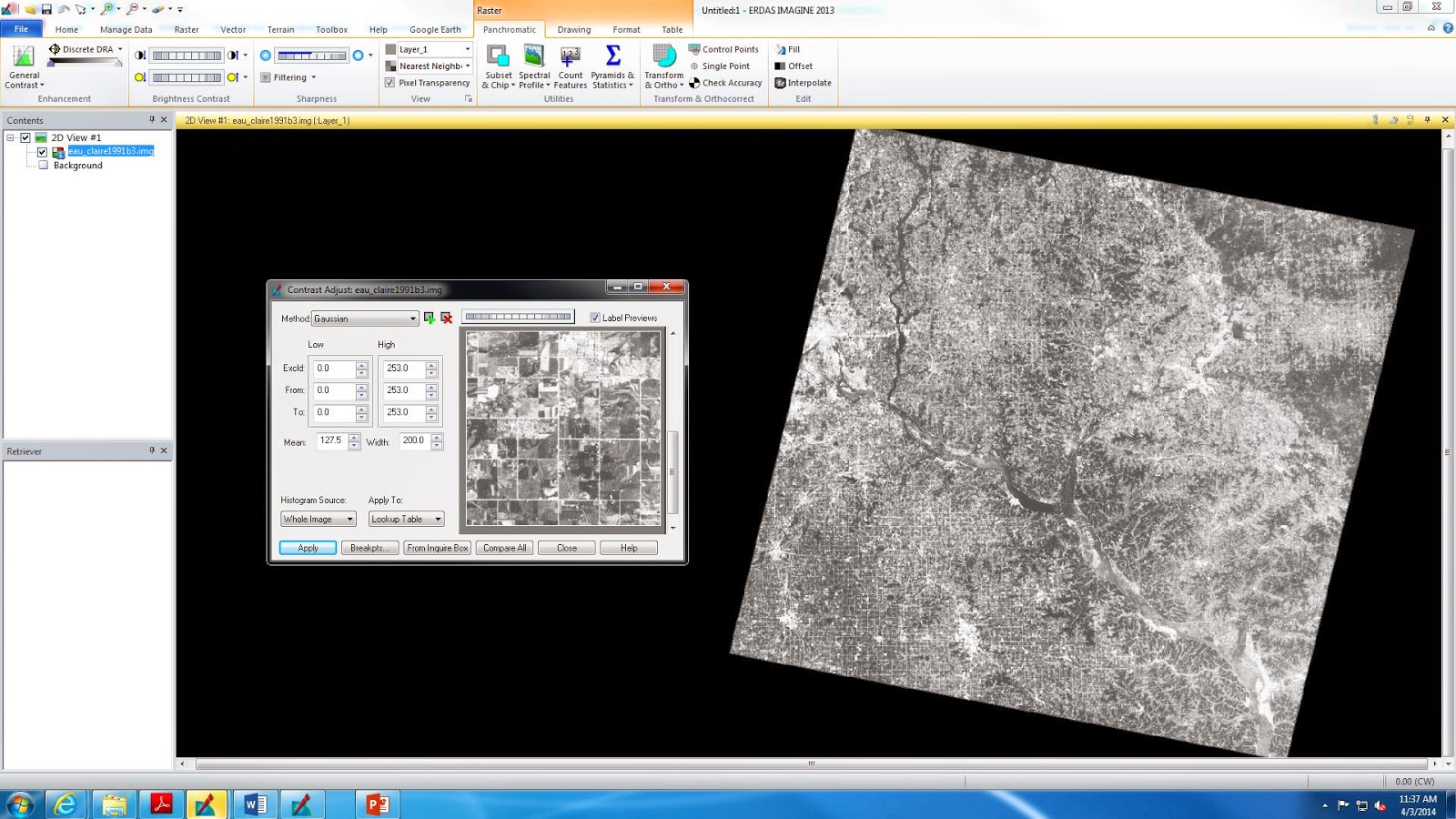

First, we open the image in ERDAS Imagine 2013 and use the polygon drawing tool to outline an area of the feature (an example can be seen in Fig. 1). Next, we activate the raster processing tools and select Supervised and then Signature Editor. With the polygon selected, we choose the "Create New Signatures from AOI" option to add the selected area to the Signature Editor. Once this is done, we can open the Signature Mean Plot for a more detailed view that helps us identify the signature. Finally, all the plots can be combined into one, and comparisons can be made based on the values displayed (Figs. 2 and 3).
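To make the idea of a signature mean concrete, the sketch below computes a per-band mean over the pixels inside an area of interest. It is only an illustration in NumPy, not how ERDAS Imagine works internally. The function name `mean_signature`, the array layout (bands, rows, columns), the six-band count, and the synthetic pixel values are all assumptions made for this example.

```python
import numpy as np

def mean_signature(image, aoi_mask):
    """Return the per-band mean of the pixels inside the AOI.

    image:    array of shape (bands, rows, cols)
    aoi_mask: boolean array of shape (rows, cols), True inside the polygon
    """
    if not aoi_mask.any():
        raise ValueError("AOI mask selects no pixels")
    # Boolean indexing keeps only AOI pixels: result has shape (bands, n_pixels)
    return image[:, aoi_mask].mean(axis=1)

# Synthetic 6-band, 4x4 image (made-up values): band b is constant at 10 * (b + 1)
image = np.stack([np.full((4, 4), 10.0 * (b + 1)) for b in range(6)])
image[:, 0, 0] = 255.0  # a bright pixel that lies outside the AOI

# Hypothetical AOI: the 2x2 block in the middle of the image
aoi_mask = np.zeros((4, 4), dtype=bool)
aoi_mask[1:3, 1:3] = True

signature = mean_signature(image, aoi_mask)
print(signature)
```

Because the bright pixel falls outside the mask, it does not affect the result, which is the same reason the polygon should be drawn well inside the feature being sampled.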

(Fig. 1) The polygon marking the selected area of standing water can be seen to the right of the two windows. The window on the left is the Signature Editor Tool, where new signatures can be added. Once the signatures are added, they can be viewed in the Signature Mean Plot, shown in the window on the right.

(Fig. 2) This image shows the Signature Editor Tool once all the features listed in the above methods section have been added.

(Fig. 3) This graph displays a combined Signature Mean Plot with all the features added to it so the data can be compared and analyzed.
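One simple way to put a number on how well two signatures separate is the Euclidean distance between their per-band means. The sketch below assumes that measure purely for illustration. It is not necessarily the test applied in this lab, and the signature values are invented rather than taken from the Eau Claire image. The function names `pairwise_distances` and `least_separable` are hypothetical.

```python
from itertools import combinations

import numpy as np

def pairwise_distances(signatures):
    """Map each pair of feature names to the Euclidean distance between their mean signatures."""
    return {
        (a, b): float(np.linalg.norm(
            np.asarray(signatures[a], dtype=float) - np.asarray(signatures[b], dtype=float)
        ))
        for a, b in combinations(signatures, 2)
    }

def least_separable(signatures):
    """Return the pair of features whose mean signatures are closest together."""
    distances = pairwise_distances(signatures)
    return min(distances, key=distances.get)

# Made-up per-band means for three features (illustrative only, six bands assumed)
signatures = {
    "standing water": [60, 40, 30, 10, 8, 5],
    "moving water": [62, 42, 33, 12, 9, 6],
    "vegetation": [55, 45, 35, 120, 70, 30],
}

distances = pairwise_distances(signatures)
closest = least_separable(signatures)
print(closest, distances[closest])
```

With these invented values the two water classes are the closest pair, which mirrors what a combined mean plot shows visually: lines that nearly overlap across all bands are the hardest to tell apart.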

Results

By the end of this lab, those who complete it can collect and analyze spectral signatures for various types of features in a multispectral remotely sensed image.

Sources

All data used in this lab were provided by Dr. Cyril Wilson. The image, which covers Eau Claire County and a selection of the Twin Cities, was collected by the Landsat ETM+ sensor in 2000.